Opinion: The problem with generative AI

The problem

If you’re like me, you’ve increasingly found yourself staring at AI-generated art. Not by choice, but because of the course the “market” is driving us toward. We will, inevitably, all be subject to it. But I’ve noticed a problem with AI art: something is missing. And no, it’s not “soul.”

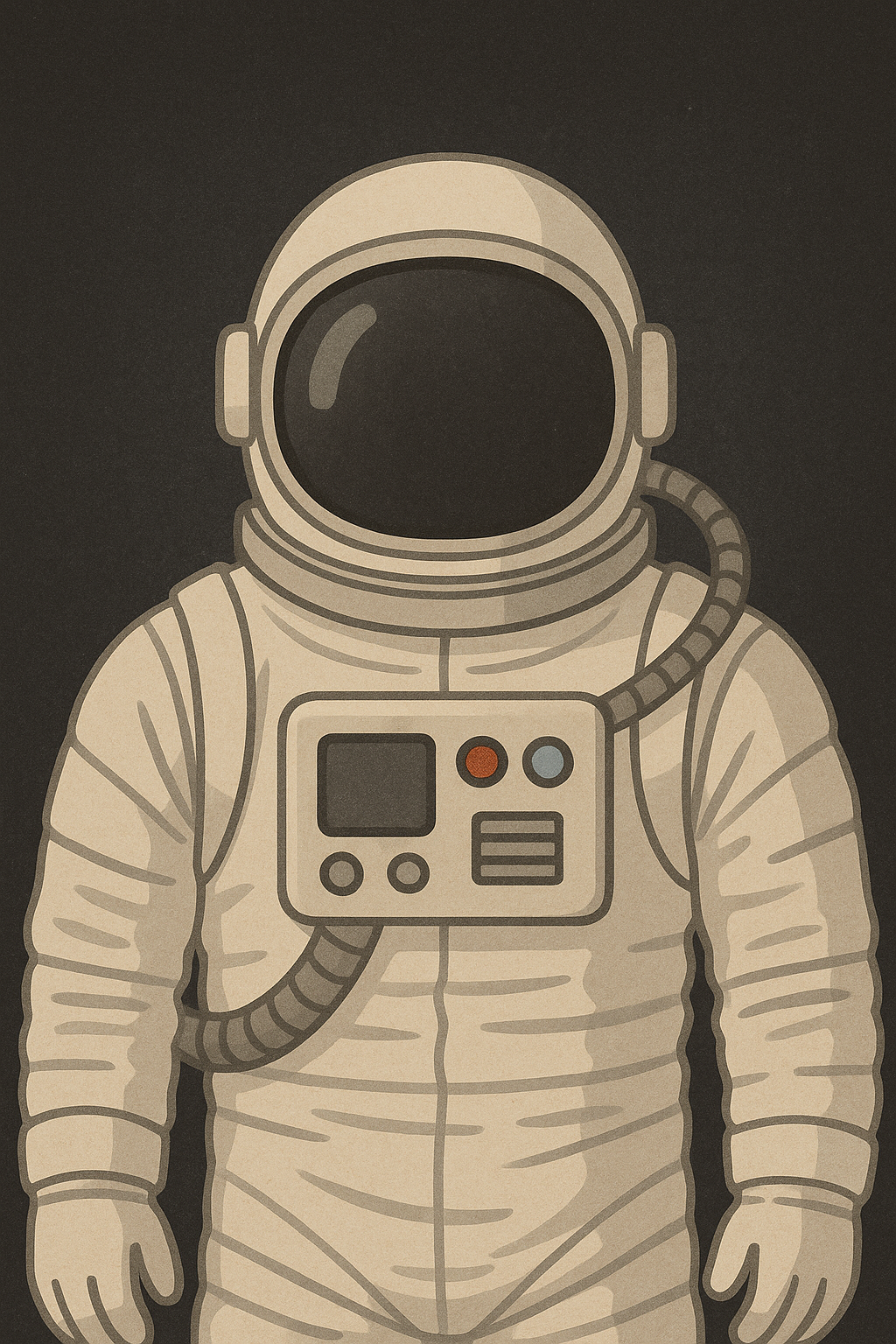

Take this astronaut image I generated with ChatGPT, for example:

Notice that thing in the center? It is what should statistically be there in an image of an astronaut. But what does it do? When faced with that question, we can make up an explanation (“it’s the control board for the astronaut’s vitals!”), but in reality, you will never be able to explain it, because it has no purpose. You can ask the AI about it, but it will just generate some surface-level, informative-sounding garbage about the image, because no intent was put into the image. It’s purely statistics: this pixel should be there because the weights say so.

Purpose

Purpose is what drives us. We yearn for it, find it, and create our own. The machine has no inherent purpose; it’s only a reflection of ours. If we don’t give it a purpose, it won’t act upon anything. So AI-generated art will always lack purpose. It will put things where, statistically speaking, they should be, but it will not ask, “Why is that there?” When we make it question itself, it goes into a loop trying to find an explanation for the object’s presence, but in reality, there is none. Now you’re probably thinking, “Oh well, easy fix: just describe in detail absolutely everything that must be generated.” And my answer is: have fun writing a whole novel to generate a simple image. At that point, it would be easier to just draw the image yourself.

You might also think, “Then we can just tell the machine not to include anything whose purpose can’t be explained.” But that will never work, because the model generating the image doesn’t know what intent and purpose are; it just generates pixels that approximate the words of the prompt. That’s why, if you look closely at this image that I asked ChatGPT to generate with “purpose,” you will see the exact same issue. Purpose (the reason things are where they are, and what they are for) is still too abstract a concept for the model. It will always fill the void with abstract objects that have no real reason to be there.

Now, I’m not saying AI will never generate images without all this abstract junk, but with the current reasoning models, we can’t shed the apparent lack of purpose.

By contrast, artists subconsciously attribute meaning to what they draw; purpose and intent are baked directly into what they are trying to represent. Even abstract art has the purpose of making you feel something. I’m not saying AI art can’t make you feel something, but when it does, it will be because that outcome was statistically probable, not because the AI wanted to make you feel anything. Hence the lack of purpose.

The machine will always reflect the purpose we give it. Whether it is aligned or not, it will simply act out the purpose (or what the kids call a “prompt”) we set for it.

Maybe purpose is the “soul” the AI is missing. If we can somehow teach the model purpose, to really reason about why things are the way they are, we might be rid of the uncanny feeling we get when seeing AI-generated imagery. Perhaps that’s too much, or even dangerous, to ask. Maybe giving the machine an independent purpose is what will lead to our extinction, and we should keep it gutted of purpose, tethered to a primitive, direct chain-of-thought model. Those are questions I can’t answer.

One thing is for certain: it will never have a better purpose than the one inherent to humanity, to live.